SpecDriven AI: Combining Specs and TDD for AI-Powered Development

How precise specs turn AI into a reliable coding partner.

How executable specifications and rigorous testing create reliable AI-assisted software.

Years of championing Continuous Integration, Continuous Delivery, DevOps, Test-Driven Development, and Infrastructure as Code taught me something fundamental: the practices that make software development predictable and reliable don't change—they evolve. Today, as AI agents become part of our development workflow, we need to extend these proven practices to ensure AI generates exactly what we intend.

I've been experimenting with an approach that does just that. I call it SpecDriven AI, and it's not a replacement for the practices we know work—it's their natural evolution for the AI era.

The Real Problem with AI-Assisted Development

Here's what I've observed after months of working with AI agents like Claude Code: they're incredibly capable, but they're only as good as the context we provide. Give them vague specifications, and you'll get vague implementations. Give them precise, traceable specifications backed by executable tests? That's when they become force multipliers.

I'm using Claude Code for this project, but the principles apply to any agentic coding tool—GitHub Copilot, Cursor, Gemini CLI, or whatever comes next. The key insight remains the same: precision in specifications leads to precision in implementation.

But here's the thing—this isn't just about AI. The same precision that makes AI effective also makes human developers more productive. When every line of code traces back to a specific specification, when every test explicitly states which specification it implements, software development becomes predictable and repeatable.

What SpecDriven AI Actually Is

SpecDriven AI synthesizes three proven practices:

- Machine-readable specifications using OpenAI's Model Spec pattern

- Rigorous Test-Driven Development (and yes, TDD is non-negotiable here)

- AI-powered implementation with persistent context

I recently explored using ATDD/BDD with the Gherkin language for AI development. While powerful, that approach requires writing specs in the controlled "Given-When-Then" syntax. SpecDriven AI offers more flexibility while maintaining the same rigor.

Here's what makes it different:

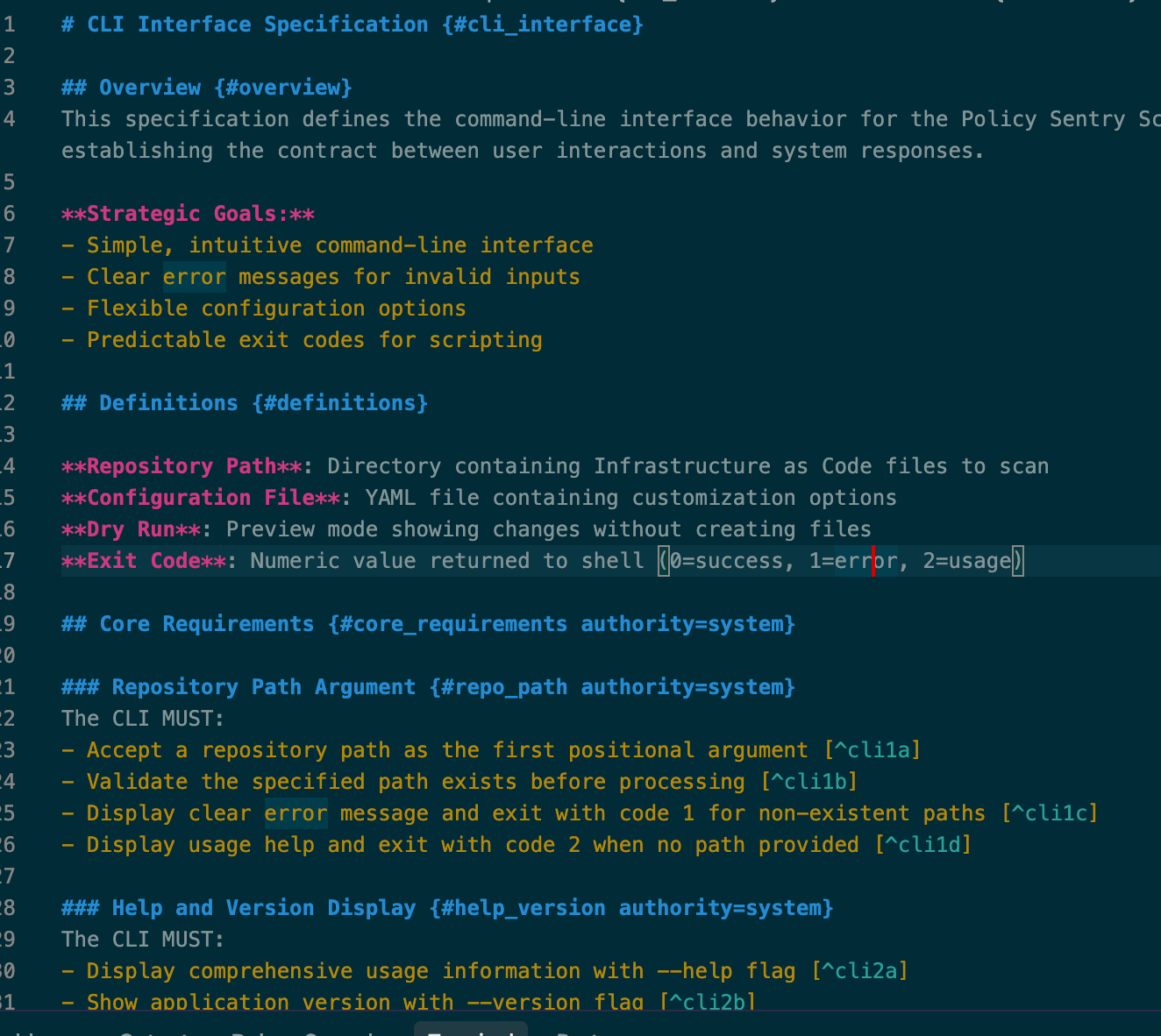

### Repository Path Argument {#repo_path authority=system}

The CLI MUST:

- Accept a repository path as the first positional argument [^cli1a]

- Validate the specified path exists before processing [^cli1b]

See that [^cli1a]? It's not just documentation. It's a footnote that resolves to the specific test validating that exact specification. Because each link can be checked automatically, it creates a chain of accountability from specification to test to implementation.

From Theory to Practice: IAM Policy Generator

Let me show you how this works in practice. I'm applying SpecDriven AI to IAM Policy Generator, a tool that scans repos and IAM usage to automatically generate least-privilege IAM policies. The tool analyzes Infrastructure as Code files to discover IAM roles, then generates secure policies using both static analysis and dynamic tracing (by analyzing GitHub Actions workflows). Here's the actual workflow:

1. Specification-First Architecture

Before writing any code, I define exactly what each feature should do:

### Repository Path Argument {#repo_path authority=system}

The CLI MUST:

- Accept a repository path as the first positional argument [^cli1a]

- Validate the specified path exists before processing [^cli1b]

- Display usage help and exit with code 2 when no path provided [^cli1c]

[^cli1a]: specs/tests/test_cli_interface.py::test_accepts_valid_repository_path

[^cli1b]: specs/tests/test_cli_interface.py::test_validates_repository_path_exists

[^cli1c]: specs/tests/test_cli_interface.py::test_requires_repository_path_argument

Every specification gets a unique identifier and an explicit test reference. No ambiguity. No guesswork.
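Because the format is so regular, it can be read mechanically. The sketch below shows one way a parser for it might look. It is not the project's tooling: `parse_spec`, `Section`, and the regular expressions are hypothetical names, and they assume headings and footnotes are written exactly as in the excerpt above.

```python
import re
from dataclasses import dataclass, field

# Heading such as: ### Repository Path Argument {#repo_path authority=system}
HEADING_RE = re.compile(
    r"^#{1,6}\s+(?P<title>.+?)\s*\{#(?P<id>[\w-]+)\s+authority=(?P<authority>\w+)\}\s*$"
)
# Footnote definition such as: [^cli1a]: specs/tests/test_x.py::test_name
FOOTNOTE_DEF_RE = re.compile(r"^\[\^(?P<ref>[\w-]+)\]:\s*(?P<target>\S+)\s*$")
# Footnote reference inside a requirement bullet, e.g. "... argument [^cli1a]"
FOOTNOTE_REF_RE = re.compile(r"\[\^([\w-]+)\](?!:)")


@dataclass
class Section:
    """One specification section (hypothetical structure for this sketch)."""
    title: str
    spec_id: str
    authority: str
    refs: list = field(default_factory=list)


def parse_spec(text):
    """Return (sections, targets): sections with their footnote refs,
    and a mapping from footnote id to the test it points at."""
    sections, targets, current = [], {}, None
    for raw in text.splitlines():
        line = raw.strip()
        heading = HEADING_RE.match(line)
        if heading:
            current = Section(heading["title"], heading["id"], heading["authority"])
            sections.append(current)
            continue
        definition = FOOTNOTE_DEF_RE.match(line)
        if definition:
            targets[definition["ref"]] = definition["target"]
            continue
        if current is not None:
            current.refs.extend(FOOTNOTE_REF_RE.findall(line))
    return sections, targets


SAMPLE = """\
### Repository Path Argument {#repo_path authority=system}

The CLI MUST:

- Accept a repository path as the first positional argument [^cli1a]
- Validate the specified path exists before processing [^cli1b]
- Display usage help and exit with code 2 when no path provided [^cli1c]

[^cli1a]: specs/tests/test_cli_interface.py::test_accepts_valid_repository_path
[^cli1b]: specs/tests/test_cli_interface.py::test_validates_repository_path_exists
[^cli1c]: specs/tests/test_cli_interface.py::test_requires_repository_path_argument
"""

sections, targets = parse_spec(SAMPLE)
```

Keeping the section identifier, authority level, and footnote targets in plain data structures means the same parse can feed a validator, a report, or the prompt given to an AI agent.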

2. AI Reads Machine-Readable Specs

When I prompt Claude Code (or any agentic coding tool) with these specifications, it understands not just what to build, but exactly which test should pass. The unique identifiers and authority levels provide critical context that eliminates the back-and-forth typically required with AI agents.

Whether you're using Claude Code, Windsurf, Gemini CLI, or the next generation of AI coding tools, machine-readable specifications transform them from code generators into reliable development partners.

3. Test-Driven Implementation (RED-GREEN-REFACTOR)

Every feature begins with a failing test that references its specification:

import tempfile

# Method of a test class; the enclosing class is omitted here
def test_accepts_valid_repository_path(self):
    """Test: CLI accepts valid repository path argument

    Implements: specs/specifications/cli-interface.md#repo_path [^cli1a]

    GIVEN a valid directory path
    WHEN the CLI is invoked with that path
    THEN it should return exit code 0 (success)
    """
    from policy_sentry_scanner.cli import main

    # A temporary directory is guaranteed to exist while the test runs
    with tempfile.TemporaryDirectory() as temp_dir:
        result = main([temp_dir])
        assert result == 0

Notice how the docstring explicitly links to the specification? This isn't busy work—it's what enables both humans and AI to understand the system's architecture.
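To complete the picture, here is roughly what the GREEN step for the repository path specification could look like. This is a minimal sketch, not the project's actual `main`: the program name is made up, and returning exit code 1 for a nonexistent path is my assumption, since the specification excerpt only fixes code 0 for success and code 2 for a missing argument.

```python
import argparse
import os
import sys


def main(argv=None):
    """Hypothetical minimal CLI entry point covering [^cli1a], [^cli1b], [^cli1c]."""
    parser = argparse.ArgumentParser(prog="iam-policy-generator")  # assumed name
    parser.add_argument("repo_path", help="path to the repository to scan")

    try:
        args = parser.parse_args(argv)
    except SystemExit as exc:
        # argparse prints usage and exits with status 2 when the
        # positional argument is missing; return that code instead.
        return exc.code

    if not os.path.isdir(args.repo_path):
        # Exit code 1 is an assumption; the spec excerpt does not name one.
        print(f"error: path does not exist: {args.repo_path}", file=sys.stderr)
        return 1

    return 0
```

Returning the exit code instead of calling `sys.exit` inside `main` is what lets the test above assert on `result` directly.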

4. Comprehensive Test Execution

The project uses a comprehensive test runner that handles all the complexity:

# Run all specification tests

./run_tests.sh

# Output includes:

# - Virtual environment setup

# - pytest execution with coverage

# - HTML coverage reports

# - Clear pass/fail status

No manual environment setup. No forgotten dependencies. Just reliable, repeatable test execution.
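For readers who prefer Python to shell, the core of such a runner can be sketched as follows. This is not the project's `run_tests.sh`: `pytest_command` and `run_tests` are hypothetical helpers, the `specs/tests` and `src` paths are assumed from the layout described in this article, and the sketch presumes pytest and pytest-cov are already installed (virtual environment setup is left out).

```python
import subprocess
import sys


def pytest_command(test_dir="specs/tests", cov_target="src"):
    """Build the pytest invocation (paths are assumptions for this sketch)."""
    return [
        sys.executable, "-m", "pytest", test_dir,
        f"--cov={cov_target}",
        "--cov-report=term-missing",  # terminal report listing uncovered lines
        "--cov-report=html",          # HTML report, written to htmlcov/ by default
    ]


def run_tests():
    """Run the suite and return pytest's exit code (0 means all tests passed)."""
    return subprocess.run(pytest_command()).returncode
```

Calling `sys.exit(run_tests())` from a small script gives the same one-command experience and passes the pass/fail status on to CI.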

5. Dual Coverage: Code AND Specifications

SpecDriven AI extends traditional coverage tracking by measuring two complementary types of coverage:

Code Coverage (pytest-cov)

# Measures which lines of implementation code are executed

./run_tests.sh

# Generates:

# - Terminal coverage report with missing lines

# - HTML coverage reports in htmlcov/

# - .coverage database for analysis

Specification Coverage (spec_validator.py)

# Validates every specification has corresponding tests

python specs/compliance/spec_validator.py

# Generates: specification_compliance_report.md

# Current: 498 specifications, 100% specification coverage

This dual approach ensures:

- Every line of implementation code is exercised by at least one test (traditional coverage)

- Every specification has a corresponding test (specification coverage)

- No orphaned tests without specifications

- No specified behavior left without an implementation

The coverage workflow is fully automated:

- run_tests.sh executes pytest with --cov=src --cov-report=html

- spec_validator.py maps all footnote references to test implementations

- Pre-commit hooks in .claude/commands/acp.md enforce both coverage types

- Results stored in .coverage database and htmlcov/ for visualization

This isn't about hitting arbitrary percentages. It's proof that we've implemented everything we promised, and tested everything we've built.
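To make specification coverage concrete, here is a minimal sketch of the kind of check involved. It is not the project's `spec_validator.py`: `spec_coverage` is a hypothetical function, and it assumes the set of existing test IDs has already been collected by some other means, for example from pytest's collection output.

```python
import re

# Footnote definition at the start of a line: [^cli1a]: path::test_name
FOOTNOTE_DEF_RE = re.compile(r"^\[\^([\w-]+)\]:[ \t]*(\S+)[ \t]*$", re.MULTILINE)
# Footnote reference anywhere else: [^cli1a] not followed by a colon
FOOTNOTE_REF_RE = re.compile(r"\[\^([\w-]+)\](?!:)")


def spec_coverage(spec_text, known_tests):
    """Compare footnotes in a spec with the tests that actually exist."""
    definitions = dict(FOOTNOTE_DEF_RE.findall(spec_text))
    references = set(FOOTNOTE_REF_RE.findall(spec_text))

    # A reference is untested if it has no definition or points at a missing test.
    untested = sorted(r for r in references if definitions.get(r) not in known_tests)
    # A test is orphaned if no footnote definition points at it.
    orphaned = sorted(set(known_tests) - set(definitions.values()))

    covered = len(references) - len(untested)
    percent = 100.0 * covered / len(references) if references else 100.0
    return {"untested": untested, "orphaned": orphaned, "percent": percent}


SPEC = """\
- Accept a repository path as the first positional argument [^cli1a]
- Validate the specified path exists before processing [^cli1b]

[^cli1a]: specs/tests/test_cli_interface.py::test_accepts_valid_repository_path
[^cli1b]: specs/tests/test_cli_interface.py::test_validates_repository_path_exists
"""

report = spec_coverage(SPEC, {
    "specs/tests/test_cli_interface.py::test_accepts_valid_repository_path",
    "specs/tests/test_cli_interface.py::test_extra",  # hypothetical orphan
})
```

In this example `report["untested"]` contains `cli1b` and `report["orphaned"]` contains the extra test, which are the two failure modes the dual-coverage approach is meant to catch.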

6. Traceable Git Workflow

Every commit traces back to a specification:

git commit -m "feat: implement repository validation via TDD (^cli1b)

- Add failing test for path existence validation

- Implement minimal validation logic

- Display appropriate error messages

Implements: specs/specifications/cli-interface.md#repo_path [^cli1b]"

This creates a development history where you can trace any line of code back to its original business specification.
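A commit-message hook is one way to keep that convention honest. The sketch below is an illustration only: `referenced_specs` and `check_commit` are hypothetical helpers, and the set of known footnote identifiers is assumed to come from the specification files.

```python
import re

# Matches footnote references such as [^cli1b] in a commit message
FOOTNOTE_ID_RE = re.compile(r"\[\^([\w-]+)\]")


def referenced_specs(commit_message):
    """Return the sorted, de-duplicated footnote ids found in the message."""
    return sorted(set(FOOTNOTE_ID_RE.findall(commit_message)))


def check_commit(commit_message, known_ids):
    """Return (ok, reason) for a commit message against known footnote ids."""
    refs = referenced_specs(commit_message)
    if not refs:
        return False, "commit message references no specification footnote"
    unknown = [ref for ref in refs if ref not in known_ids]
    if unknown:
        return False, f"unknown specification footnote(s): {', '.join(unknown)}"
    return True, "ok"


MESSAGE = """feat: implement repository validation via TDD (^cli1b)

- Add failing test for path existence validation
- Implement minimal validation logic
- Display appropriate error messages

Implements: specs/specifications/cli-interface.md#repo_path [^cli1b]"""

result = check_commit(MESSAGE, {"cli1a", "cli1b", "cli1c"})
```

Only the bracketed `[^cli1b]` form in the `Implements:` line is matched here; the parenthesized shorthand in the subject line is ignored by this sketch.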

Authority Levels: A Critical Innovation

Not all specifications are created equal. The authority level system provides explicit boundaries:

- authority=system: Core specifications that MUST NOT change

- authority=platform: Platform-specific specifications with some flexibility

- authority=developer: Developer-configurable features

This tells both AI agents and human developers exactly where they have flexibility and where precision is mandatory. It's the difference between "the system should probably validate inputs" and "the system MUST validate inputs according to this exact specification."
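One way to make those boundaries machine-checkable is to map each authority level to a change policy. The sketch below is my own interpretation, not part of the approach as specified: the `Authority` enum mirrors the three levels above, while the policy labels (`forbidden`, `needs-review`, `allowed`) and the `change_policy` function are hypothetical.

```python
from enum import Enum


class Authority(Enum):
    """The three authority levels used in the specification headings."""
    SYSTEM = "system"
    PLATFORM = "platform"
    DEVELOPER = "developer"


# Hypothetical policy labels; the article defines the levels, not these names.
CHANGE_POLICY = {
    Authority.SYSTEM: "forbidden",       # core specifications MUST NOT change
    Authority.PLATFORM: "needs-review",  # some flexibility
    Authority.DEVELOPER: "allowed",      # developer-configurable
}


def change_policy(authority_attr):
    """Map an `authority=...` attribute value to a change policy.

    Raises ValueError for a level that is not one of the three defined.
    """
    return CHANGE_POLICY[Authority(authority_attr)]
```

A tool or an agent prompt could consult `change_policy("system")` before proposing an edit to a specification, and refuse outright when the answer is `forbidden`.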

The AI Amplification Effect

When you combine precise specifications with AI assistance, something remarkable happens. I've watched AI agents:

- Catch specification gaps before I write a single test

- Suggest edge cases I hadn't considered

- Generate implementations that maintain consistency across thousands of lines of code

- Remember context across multiple development sessions

But—and this is crucial—this only works with machine-readable specifications and traceable tests. Without that foundation, you're just hoping the AI guesses correctly.

Key Learnings from Real Implementation

After applying SpecDriven AI to IAM Policy Generator, here's what actually matters:

Automated Tooling is Non-Negotiable

Manual processes don't scale. You need:

- Spec validator: Instant feedback on specification coverage

- Test runner: One-command test execution with coverage

- Pre-commit hooks: Prevent incomplete implementations from polluting the codebase

Test Structure Drives Quality

This isn't about test coverage percentages. It's about:

- Explicit traceability: Every test knows which spec it validates

- Clear boundaries: Tests validate behavior, not implementation

- Fast feedback: Sub-second test execution keeps you in flow
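Explicit traceability can also be verified from the test side. The following sketch reads the `Implements:` line out of a test's docstring. `spec_link` and the regular expression are hypothetical and assume the docstring convention shown earlier in this article.

```python
import inspect
import re

# Matches: Implements: specs/specifications/cli-interface.md#repo_path [^cli1a]
IMPLEMENTS_RE = re.compile(
    r"Implements:\s*(?P<spec>\S+?)#(?P<anchor>[\w-]+)\s*\[\^(?P<ref>[\w-]+)\]"
)


def spec_link(test_func):
    """Return (spec_file, section_id, footnote_id), or None if the test
    carries no Implements: line in its docstring."""
    doc = inspect.getdoc(test_func) or ""
    match = IMPLEMENTS_RE.search(doc)
    if match is None:
        return None
    return match["spec"], match["anchor"], match["ref"]


def test_accepts_valid_repository_path():
    """Test: CLI accepts valid repository path argument

    Implements: specs/specifications/cli-interface.md#repo_path [^cli1a]
    """


def test_without_link():
    pass


link = spec_link(test_accepts_valid_repository_path)
```

A test for which `spec_link` returns `None` is a candidate orphan: it validates something, but nothing says which specification.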

AI Context Preservation Actually Works

With proper structure, AI agents can:

- Resume work after days or weeks

- Understand the entire system architecture

- Generate consistent implementations

- Suggest improvements based on existing patterns

Real Metrics That Matter

From the current IAM Policy Generator implementation:

- 498 specifications → 498 passing tests

- Zero orphaned tests (tests without specifications)

- Zero untested specifications (specifications without tests)

- 100% automated validation (no manual checking required)

Getting Started with SpecDriven AI

Want to try this? Here's your roadmap:

1. Start with Structure

mkdir -p specs/{specifications,tests,compliance}

mkdir -p src docs

touch CLAUDE.md run_tests.sh

2. Write One Specification

Pick your simplest feature. Write it clearly:

### Feature Name {#feature_id authority=system}

The system MUST:

- Do one specific thing [^feat1a]

[^feat1a]: specs/tests/test_feature.py::test_one_specific_thing

3. Write the Failing Test

def test_one_specific_thing(self):
    """Implements: specs/specifications/feature.md#feature_id [^feat1a]"""
    # This should fail
    assert False

4. Make it Pass

Implement the minimum code to make the test green.

5. Validate and Commit

Run your validation tools. Commit with the footnote reference.

6. Repeat

Build your system one traceable specification at a time.

Why This Matters Now

We're at an inflection point. AI agents are becoming incredibly capable, but without structure, they're just faster ways to create the same old messes. SpecDriven AI provides that structure while making human developers more effective too.

The results speak for themselves:

- Faster development: AI agents understand specifications instantly

- Higher quality: Every specification is tested

- Better maintenance: Complete traceability from specification to code

- Confident refactoring: Comprehensive test coverage

What I've Learned

SpecDriven AI isn't about replacing human judgment with AI automation. It's about creating a development process where precision enables productivity. Where specifications guide implementation. Where tests prove correctness.

The future of software development isn't AI-first or human-first—it's precision-first. And precision requires specifications, tests, and traceability.

That's what SpecDriven AI delivers. Not as a promise, but as a practice I'm using every day to ship better software faster.

Try it. Start with one specification, one test, one implementation. See what happens when you give both yourself and your AI agent the precision you both need to excel.

What's your experience with AI-assisted development? Have you found patterns that work? I'd love to hear about your journey—reach out and let's compare notes.